The development of virtualization and cloud technologies, together with the widespread adoption of agile methodologies and DevOps practices, has given rise to a new approach to software development: microservice architecture (MSA). In the traditional model, individual modules can be heavyweight monolithic programs; in MSA, software is composed of many lightweight modules, each of which performs one simple function. This follows the UNIX philosophy: “Do one thing and do it well.” A microservice is an isolated piece of code aimed at solving a single problem. Software development thus becomes a matter of laying “bricks” from which a full-fledged “house” is built, which simplifies the “construction”.

To keep microservices running, simplify their management and, most importantly, isolate them from each other, they are “packed” into containers. A container is a shell that contains everything needed to launch and run a specific microservice. Several different containers can run under the same operating system, but each works with its own libraries and dependencies. The failure of one container does not affect the others, and the failed container can be restarted almost instantly on another server in the cluster.

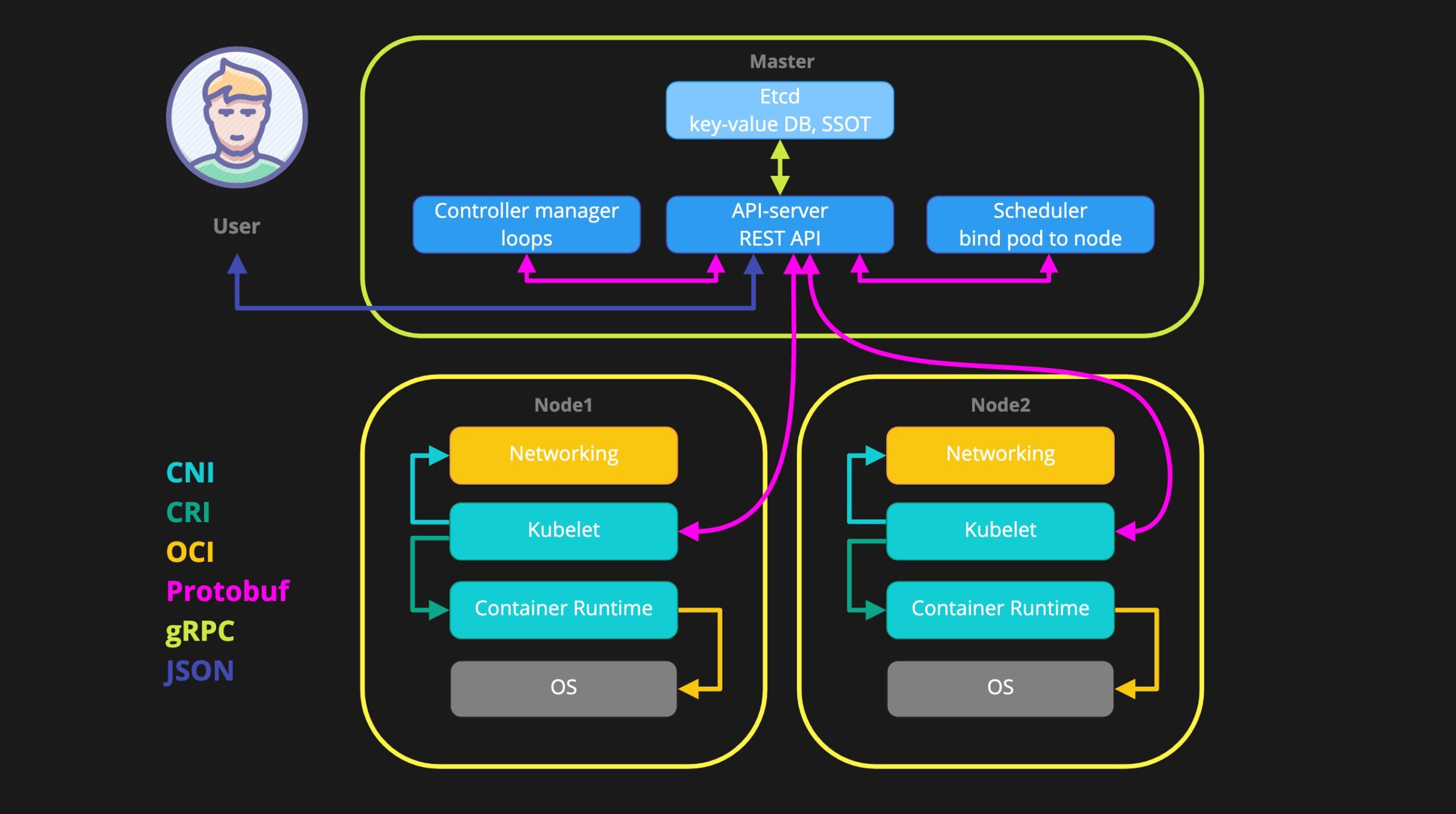

To optimize and automate container management, special software is used: an orchestrator.
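As a rough illustration of what an orchestrator automates, the sketch below models a tiny cluster in Python. It is a toy under stated assumptions, not how Kubernetes or any real orchestrator is implemented: the `Node` class, the `reconcile` function and the service names `cart` and `payments` are all hypothetical. The idea it shows is that the operator declares how many copies of each microservice should run, and the orchestrator repeatedly compares that with what is actually running and starts whatever is missing.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Node:
    """A hypothetical server in the cluster (illustrative only)."""
    name: str
    healthy: bool = True
    containers: list[str] = field(default_factory=list)


def reconcile(desired: dict[str, int], nodes: list[Node]) -> None:
    """Start any missing replicas so that each service reaches its desired count.

    Containers on unhealthy nodes are treated as lost. Scaling down is
    deliberately left out to keep the sketch short.
    """
    healthy = [n for n in nodes if n.healthy]
    if not healthy:
        raise RuntimeError("no healthy nodes available")

    # Containers on failed nodes no longer count as running.
    for node in nodes:
        if not node.healthy:
            node.containers.clear()

    for service, replicas in desired.items():
        running = sum(n.containers.count(service) for n in healthy)
        for _ in range(replicas - running):
            # Naive placement rule: pick the healthy node with the fewest containers.
            target = min(healthy, key=lambda n: len(n.containers))
            target.containers.append(service)


nodes = [Node("node-a"), Node("node-b"), Node("node-c")]
desired = {"cart": 2, "payments": 1}

reconcile(desired, nodes)   # initial placement across the cluster
nodes[0].healthy = False    # simulate the failure of one server
reconcile(desired, nodes)   # the lost container is started on another node
```

After the second call, the cluster again runs two `cart` containers and one `payments` container, all on the surviving nodes. Real orchestrators add scheduling constraints, health checks, networking and much more on top of this basic loop.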

Industry practice suggests that web applications today are best developed with a cloud-native approach, using comprehensive platforms for working with containers. It is therefore worth thinking now about how your company develops, deploys and maintains its business systems. Kubernetes has become close to the industry standard for running containerized microservices; other orchestrators occupy only narrow niches, and their share is very small.

Kubernetes-based solutions have become extremely popular over the past few years thanks to their extensive customization options. In addition, Kubernetes can be configured and run on almost any platform: bare metal, virtual machines, and the cloud.

Where did it all come from?

The word kubernetes in ancient Greek described the person who steers a ship, the helmsman. This graceful allegory captures the essence of a technology that “steers” containers. In 2006, long before Kubernetes existed, two Google engineers began work on the control groups (cgroups) project. The work became a feature of the Linux kernel itself, where it makes it possible to limit and isolate RAM, CPU, network and disk I/O resources for a collection of processes.

The next step was Google’s release of its own open source system called lmctfy (Let Me Contain That For You). The tool was intended as an alternative to LXC (Linux Containers) for working with application containers on Google servers. At that time, lmctfy, LXC and Docker all occupied the same position in the market. To avoid this kind of overlap, Google halted all development of lmctfy in 2015. In the same year, the search giant published the paper “Large-Scale Cluster Management at Google with Borg.” Borg itself remained an internal project, but the experience gained with it went into an open source system that Google had released in 2014 under the name Kubernetes. Further development led to widespread popularity, and Kubernetes has since become the de facto industry standard for managing Docker-based containers.

It is also worth noting that Docker has not remained the only tool for containerizing applications. In 2015, the Linux Foundation, together with major industry players, launched the Open Container Project (now known as the Open Container Initiative) to develop open standards for container formats and runtimes. The project aimed to unite the competing approaches of Docker and CoreOS under a single standard. In 2016, Red Hat began building its own container runtime, publishing it online as the Open Container Initiative Daemon (OCID). Even then, the project set itself the task of “implementing a standard runtime interface for containers in Kubernetes”; it was later renamed CRI-O, after the Kubernetes Container Runtime Interface (CRI). Kubernetes today supports both technologies. Developers have only benefited from this, gaining a variety of free products and healthy competition between container runtimes.

Kubernetes is certainly not the only system with such capabilities, but it remains the leader today thanks to broad support from the open source developer community. In addition, many vendors build their own proprietary systems on top of Kubernetes.